Hiring puzzles can quietly favor a certain type of candidate: 10 examples

A widely cited meta-analysis by Schmidt & Hunter, summarized clearly in Plum’s breakdown of their findings, shows that general mental ability (GMA) tests consistently rank among the strongest predictors of job performance across roles. The same body of research shows that unstructured interviews and other less standardized methods have substantially lower predictive validity. The broader implication is that structured assessments tied to underlying reasoning tend to outperform impression-based or novelty-driven evaluation techniques. That distinction matters because many hiring puzzles fall into a gray area: they feel objective, but without validation they may not measure the constructs most closely tied to long-term success.

If hiring is meant to be evidence-based, then the question is not whether puzzles are entertaining or memorable. The question is whether they have the same predictive grounding as structured cognitive measures, which have been shown in large-scale meta-analyses to correlate with real job performance.

When Puzzle Prestige Becomes a Proxy for a Major

Many elite tech firms once popularized puzzles like “How many piano tuners in Chicago?” not because the questions mapped to coding work, but because they signaled a certain problem-solving identity. This echoes what cognitive scientists Stanovich & West call “epistemic authority”: the idea that someone who solves abstract logical problems quickly is inherently “smart.”

The hidden assumption is that chess-like reasoning translates directly into job performance. Yet Schmidt and Hunter’s research on job performance points to general cognitive ability as one of the strongest predictors, not skill at solving contrived riddles. An informed critic would also note that such puzzles are learnable through practice, so candidates with access to prep resources, who tend to be wealthier, gain an edge, and that the puzzles advantage people socialized into analytical norms while disadvantaging capable but differently trained thinkers.

A better practice is to use structured work samples tied to real tasks, which meta-analyses, including Schmidt & Hunter’s, rank among the most valid predictors of job performance, a standard abstract puzzles have not been shown to meet.

When Geometry Trumps Judgment

Mental rotation tasks and geometry-based logic challenges are classic features of STEM-focused interviews. While these exercises are engaging, they disproportionately reward candidates with backgrounds in engineering, physics, or mathematics, subtly skewing hiring toward a narrow educational demographic.

Critics of standardized testing point to a similar pattern in SAT scores: students oriented toward the liberal arts or social sciences could be disadvantaged on spatially intensive items despite strong reasoning and analytical skills. Companies that use these puzzles often overlook that professional judgment, ethical reasoning, and strategic thinking are critical to decision-making and cannot be measured through abstract geometric manipulation.

When Puzzles Speak a Local Idiom

Puzzles that reference Star Trek warp speeds or The Hitchhiker’s Guide to the Galaxy implicitly assume a global cultural fluency that many candidates do not share. This mirrors the broader sociolinguistic critique of cultural capital (Bourdieu, 1991): people with specific educational or cultural privilege are more comfortable navigating embedded references.

The assumption that everyone enjoys a fun reference ignores documented performance gaps among non-native speakers and international candidates, who must spend cognitive bandwidth decoding the context before they can solve the core problem.

An alternative is to describe problems in plain language, free of culturally specific markers, or, better yet, to tie them to job-relevant scenarios that do not depend on pop culture.

When the Puzzle Is a Proxy for Past Access

In the early 2000s, a wave of software companies began including puzzles requiring mastery of Microsoft Excel macros or SQL queries in their interview pipelines. The intended goal was simple: test practical competence. But in practice, the puzzles favored applicants who had prior access to specific educational or professional resources.

Candidates trained at elite boot camps or universities often had an advantage, while equally capable individuals without such exposure struggled. Over time, companies realized that evaluating core problem-solving principles, such as data interpretation and logical structuring, provided a more reliable measure of potential than familiarity with specific tools.

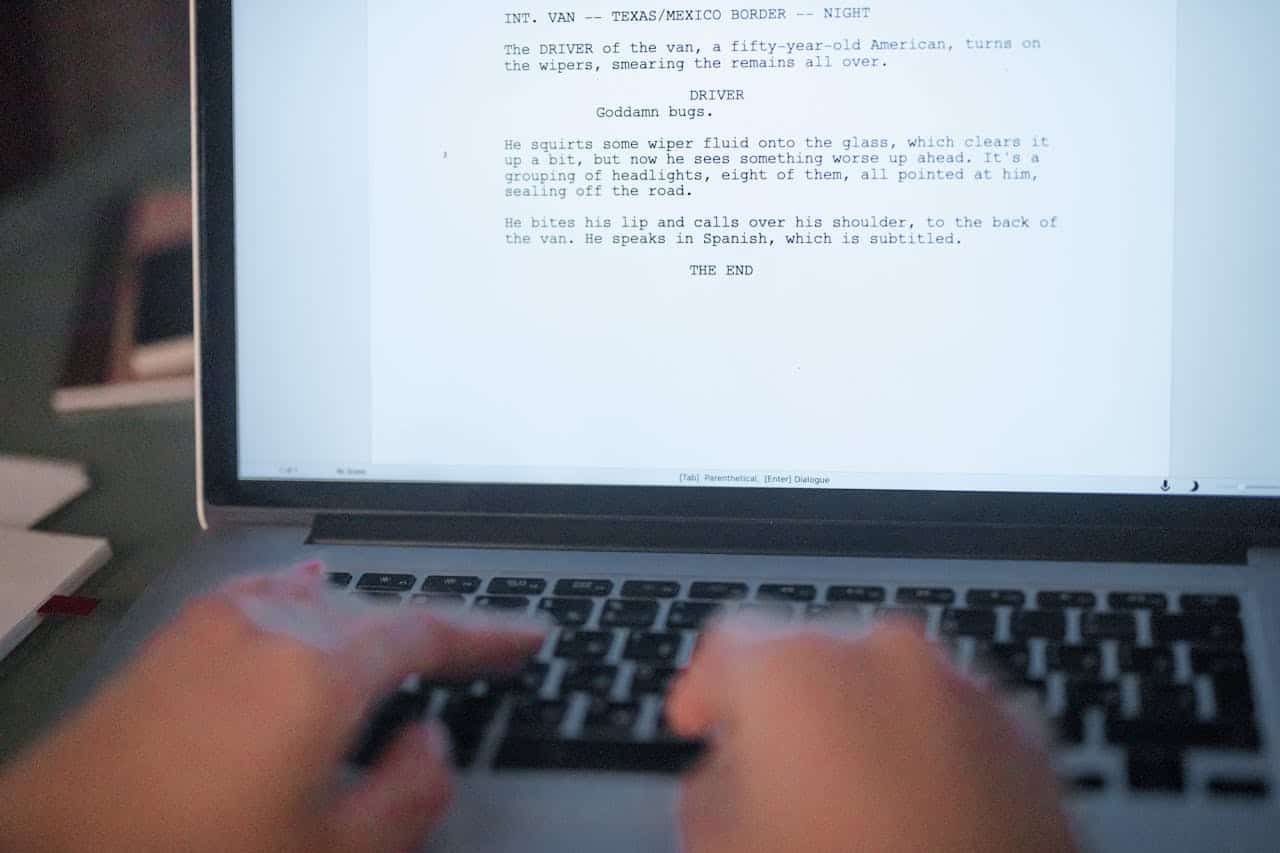

The Obscure Logic Obstacle

Many tech firms initially used puzzles that tested abstract thinking, such as lateral-thinking riddles, to evaluate job candidates. These puzzles often reward candidates who are comfortable with abstraction and are accustomed to solving riddles for fun. However, this type of testing fails to reflect the real problem-solving challenges employees face in the workplace.

In contrast to isolated lateral-thinking puzzles, real-world problems typically require iterative, context-dependent reasoning and judgment under uncertainty. As companies recognized this mismatch, the trend shifted toward realistic, project-based assessments that simulate authentic job tasks.

Because these simulations focus on practical, job-relevant scenarios rather than contrived problems disconnected from actual work, they give a better read on how a candidate will perform in the role.

When Wordiness Masks the Task

Some companies assume that complex phrasing makes a problem deeper. Instead, it can turn the problem into a reading comprehension test masquerading as a logic challenge. Construct validity research highlights this conflation: if a measure inadvertently taps skills other than the intended ones, its results become difficult to interpret.

The implicit premise that linguistic sophistication signals analytical depth conflates language mastery with reasoning ability. One counterpoint is that verbosity tests patience, not problem-solving; another is that it systematically underrates candidates from varied language backgrounds.

A fairer approach is to separate comprehension from reasoning. Use plain language descriptions and, if needed, assess communication separately in other parts of the process.

Timed Puzzles Favor Quick, Not Deep, Thought

Puzzles with harsh time limits appeal to the myth that real work happens under fire. Yet research on decision-making, including Kahneman’s Thinking, Fast and Slow, distinguishes fast, intuitive judgment from slow, careful deliberation; both are valuable, but they are distinct.

The assumption here is that faster means better, which conflates those two modes of thinking. A further objection is that timed conditions disproportionately stress neurodivergent thinkers, who, research suggests, often excel when pace isn’t penalized.

Instead of clock-based cutoffs, allow a reasonable amount of time and assess the quality of reasoning, so that thoughtful iteration, not reflex speed, drives evaluation.

When Puzzles Assume One Cultural Logic

Many traditional hiring puzzles are based on cultural assumptions, such as language subtleties, idiomatic phrasing, or assumed interpersonal behaviors. These puzzles often assume that all candidates will approach problems from the same cultural perspective. This lack of cultural sensitivity can disadvantage candidates from diverse backgrounds who may not share the same cultural logic or references.

Firms that use puzzles based on narrow cultural assumptions may inadvertently create barriers to entry for talented individuals who think differently or have been socialized in different cultural contexts.

Research suggests that companies using multi-perspective scenario analysis, in which candidates from diverse backgrounds are invited to interpret and solve problems from multiple angles, often achieve more robust and innovative outcomes. By broadening puzzle scenarios to include diverse perspectives, companies can assess a wider range of cognitive abilities and build a more inclusive hiring process.

Penalizing Non‑Linear Thinkers

Many hiring puzzles are designed with a single correct answer in mind, which inadvertently penalizes non-linear thinkers who may approach the problem from different angles. These puzzles prioritize convergence on one solution over exploration of alternatives. Real-world problems, by contrast, often have several workable solutions and reward the ability to generate and weigh them.

Companies that focus solely on one correct answer may miss out on candidates who bring diverse problem-solving approaches. A more inclusive approach would be to present open-ended problems and evaluate the quality and logic of reasoning across multiple potential solutions. This approach aligns with design-thinking practices, which emphasize exploring various ways to solve a problem and recognizing that multiple valid solutions may exist.

By broadening the evaluation criteria, companies can recognize a wider range of problem solvers.

When Point Systems Privilege Playful Problem Identity

Gamified puzzles and simulation exercises emerged from behavioral science research on motivation, drawing on principles outlined in Richard Ryan and Edward Deci’s Self-Determination Theory. Leaderboards, point systems, and metric optimizations make assessments feel like games, rewarding engagement, pattern recognition, and competitive instincts.

The concept of “play identity” suggests that individuals respond differently to game-like incentives; it has been explored in educational research, notably by James Paul Gee, to explain varying performance in gamified learning.

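Gamification therefore shows how engagement mechanics can intersect with skill assessment and create unintended selection pressures: a gamified hiring exercise may partly measure comfort with competitive, game-like formats, favoring candidates who already enjoy them over those best suited to the job.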

Key Takeaways

- Structured assessments outperform unstructured or novelty-driven techniques, meaning standardized evaluation of reasoning and problem-solving is more predictive than clever puzzles or impression-based interviews.

- Hiring puzzles can create an illusion of objectivity, yet without evidence of validation, they may measure familiarity, speed, or exposure rather than job-relevant ability.

- Predictive validity, not entertainment value, should guide assessment design, especially if organizations claim to use evidence-based hiring practices.

- Evidence-based hiring requires alignment between what is tested and what predicts performance, ensuring that evaluation methods reflect validated constructs rather than cultural or stylistic preferences.

Disclosure: This article was developed with the assistance of AI and was subsequently reviewed, revised, and approved by our editorial team.
